December 1, 2025

Introducing Lux, the State-of-the-Art Foundation Computer-Use Model

The world's best, fastest, and cheapest computer-use model is now available via SDK

We are happy to share that OpenAGI is coming out of stealth and releasing its first foundation computer-use model, Lux.

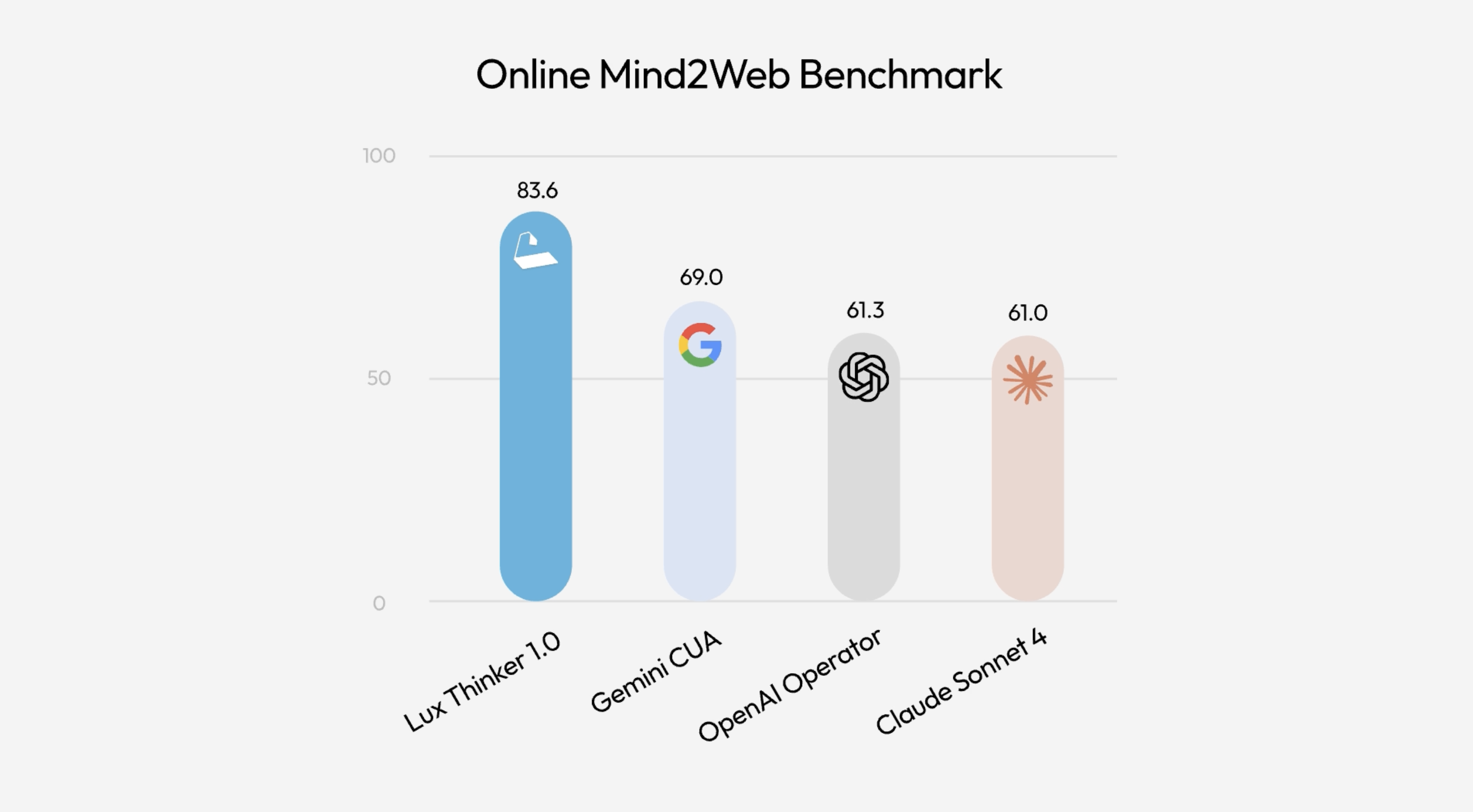

Our state-of-the-art model scored 83.6 on the Online-Mind2Web benchmark, which comprises over 300 real-world, web-based computer-use tasks. That score outperforms models from leading AI labs, including Google's Gemini CUA (69.0), OpenAI's Operator (61.3), and Anthropic's Claude Sonnet 4 (61.0).

Lux delivers more than higher accuracy: it is also significantly faster and more cost-efficient than current computer-use models. Lux completes each step in just 1 second, compared with roughly 3 seconds for OpenAI's model, and it is 10x cheaper.

This combination makes Lux not only more capable but also far more practical for real-world deployment.

Developers, researchers, founders, and enterprises can now use the OpenAGI SDK to build performant, fast, and cost-efficient computer-use applications. Unlike browser-confined tools such as ChatGPT Atlas, Lux can operate across any desktop application. That makes it a versatile tool that truly democratizes computer use for developers.

Lux enables a wide range of agentic applications: automation of software QA workflows, deep research, social media management, online store management, data entry, and bulk operations, to name a few.

Lux comes in three modes, allowing developers to choose the best fit for their needs:

Actor mode: Operates very quickly, about one second per step, and is well-suited for clearly specified tasks.

Thinker mode: Handles vague, multi-step goals by breaking them down into actionable tasks.

Tasker mode: Enables maximum control by accepting a Python list of steps, which it completes one by one, retrying until the task is finished.
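Tasker mode's step-by-step behavior can be illustrated with a generic retry loop over a plain Python list of steps. This is a minimal sketch, not the OpenAGI SDK API. The names `run_tasker`, `run_step`, `max_retries`, and `flaky_runner` are all hypothetical. A real integration would replace the toy `flaky_runner` with a call to the model. The sketch also caps retries per step so that a step that can never succeed fails loudly instead of looping forever; Tasker mode itself is described as retrying until the task is finished.

```python
from typing import Callable, Sequence

# Hypothetical executor type: takes one step description and returns
# True if the step succeeded. This is NOT an OpenAGI SDK type.
StepRunner = Callable[[str], bool]


def run_tasker(steps: Sequence[str], run_step: StepRunner, max_retries: int = 3) -> list[str]:
    """Run steps in order, retrying each failed step up to max_retries times.

    Returns the steps that completed. Raises RuntimeError if a step still
    fails after all retries (an assumed safety cap, not documented behavior).
    """
    completed: list[str] = []
    for step in steps:
        for _ in range(max_retries):
            if run_step(step):
                completed.append(step)
                break  # step succeeded; move on to the next one
        else:
            # The inner loop finished without a break: every attempt failed.
            raise RuntimeError(f"step failed after {max_retries} attempts: {step!r}")
    return completed


# Toy stand-in for the agent: each step fails on its first attempt, then succeeds.
attempts: dict[str, int] = {}


def flaky_runner(step: str) -> bool:
    attempts[step] = attempts.get(step, 0) + 1
    return attempts[step] >= 2


steps = ["Open the spreadsheet", "Fill in column B", "Save the file"]
done = run_tasker(steps, flaky_runner)
print(done)
print(attempts)
```

Keeping the retry policy in a separate loop from the step executor makes it easy to swap in a different policy (for example, backoff between attempts) without touching the code that actually drives the agent.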

A Novel Training Approach

We trained Lux with a method called Agentic Active Pre-training, which is fundamentally different from the approach used for today's large language models (LLMs). Pre-training is a critical stage in AI development, yet most LLMs are pre-trained to passively absorb knowledge from the internet. That is much like learning to drive by memorizing thousands of manuals without ever touching a steering wheel. Agentic Active Pre-training instead lets the model learn by doing: it actively explores digital environments and refines its skills through large-scale, real-world interaction. The approach also differs from reinforcement learning in its mathematical optimization objectives. It focuses on self-driven understanding and exploration rather than reward-based behavior correction.

Building an Open Ecosystem

At OpenAGI, we are committed to building an open ecosystem for computer use to benefit developers, researchers, and the entire AI community.

We have already open-sourced OSGym, the data engine and infrastructure used to train our agent models (see the technical report and GitHub repository). OSGym is massively scalable: it runs thousands of OS replicas in parallel and generates over a thousand data points per minute. It also generalizes across a wide range of computer-use tasks. This foundation lets other researchers train and experiment with their own computer-use agents at scale.

Build powerful computer-use applications with our SDK, and ship great stuff!

Join our Discord if you have any questions or would like to hang out with the computer-use community!
